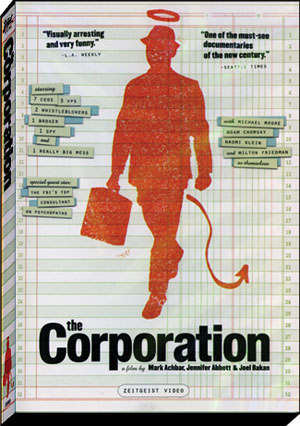

For decades the term “Corporate America” has been bandied about. But what does that mean, exactly? Does the term have the same meaning from person to person? Do we know what we’re talking about when we use the term? Does the person we’re talking to have the same understanding? These are challenging but important questions to explore.

For better or worse, corporations play a major role in our lives, our government, and the world. Yet how well do we understand the corporation as a social institution? In history class, we might learn about things like the breakup of Standard Oil or major environmental issues like DDT or the Exxon Valdez oil spill. And we might occasionally hear news about a lawsuit or controversy involving this or that company.

But given the great influence large corporations now seem to have in the world, all of us would do well to gain a better understanding of this institution. And this excellent documentary can help.

One viewer said that watching this documentary is like taking a class (but much more enjoyable). It covers basic questions like: What is a corporation? How did corporations arise and evolve over time? It goes on to cover more complex questions like: What role do corporations play in society? How do businesses balance their profit motive with the public good? Do corporations have a duty to be socially responsible?
